
YouTube’s channel recommendations have helped "unite the far-right" on the platform and actively promote channels that peddle conspiracy theories, according to new research.

Jonas Kaiser and Adrian Rauchfleisch’s research paper, "Unite the Right? How YouTube’s Recommendation Algorithm Connects the U.S. Far-Right," is the first large-scale analysis of the channels that YouTube automatically recommends users subscribe to and visit. They conclude that the channel recommendation algorithm pushes users to more fringe and extreme channels, such as Alex Jones of Infowars, while also elevating far-right voices above others.

"While watching one of Alex Jones' videos won't radicalize you, subscribing to Alex Jones' channel and then, maybe, to the next set of recommendations that YouTube throws your way might. These subscriptions, then, fill your front page and influence the way you perceive new topics," they said.

YouTube automatically generates channel suggestions under the Related Channels sidebar on the main page of an account. A channel owner also has the option to add their own curated list of recommended channels above the list generated by YouTube’s algorithm.

The researchers found that the networks of YouTube-suggested channels within the mainstream, and among conservative and far-right channels, are much larger than the corresponding networks on the left and far left.

A source familiar with YouTube’s recommendation algorithm told BuzzFeed News that just as a user might start on a mainstream channel and end up being recommended a fringe channel at some point, it also works the other way. They said the algorithm can point someone on a fringe channel toward more mainstream content.

YouTube's recommended channels for Fox News.

YouTube

The world’s biggest video website is already facing scrutiny and criticism for the way its video recommendation algorithm automatically surfaces fringe and conspiracy content for users. Writing in the New York Times recently, techno-sociologist Zeynep Tufekci said the video recommendation algorithm “promotes, recommends and disseminates videos in a manner that appears to constantly up the stakes. Given its billion or so users, YouTube may be one of the most powerful radicalizing instruments of the 21st century.”

Kaiser and Rauchfleisch found the channel recommendation algorithm can have the same effect.

To conduct their research, they began with an initial set of 1,356 mainstream, liberal, and conservative YouTube channels, and those run by official political parties. Then, they crawled those channels’ manually suggested recommendations to bring in an additional 2,977 channels. That created an initial data set of 4,333 channels. They used a programming script to identify and follow the algorithmically generated channel recommendations for these channels, and for two subsequent steps of recommendations.

In total, they built a list of more than 13,500 channels and their relationships to each other. (Their paper contains a more detailed breakdown of the channels and methodology.)
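The expansion the researchers describe is a form of snowball sampling: start from a seed set, then follow recommendations outward for a fixed number of steps. The sketch below is an illustration of that idea only, not the researchers' script. The function name `snowball`, the `max_steps` parameter, and the toy channel names are all assumptions made for the example; a real crawl would fetch each channel's recommendations from YouTube rather than from a dictionary.

```python
from collections import deque


def snowball(seeds, recommendations, max_steps):
    """Breadth-first expansion from a seed set of channels.

    `recommendations` maps a channel to the channels suggested on its page
    (hypothetical stand-in for whatever a real crawler would fetch).
    Returns each reached channel's step count and the directed edges seen.
    """
    depth = {channel: 0 for channel in seeds}
    queue = deque(depth)  # iterating the dict also drops duplicate seeds
    edges = []
    while queue:
        channel = queue.popleft()
        if depth[channel] >= max_steps:
            continue  # channels at the outer ring are recorded but not expanded
        for suggested in recommendations.get(channel, []):
            edges.append((channel, suggested))
            if suggested not in depth:
                depth[suggested] = depth[channel] + 1
                queue.append(suggested)
    return depth, edges


# Toy data with made-up channel names, purely for illustration.
toy = {"A": ["B", "C"], "B": ["C", "D"], "C": ["A"], "D": ["E"], "E": ["F"]}
depth, edges = snowball(["A"], toy, max_steps=2)
print(depth)       # channels reached within two steps of the seed
print(len(edges))  # recommendation links recorded along the way
```

With two steps from seed "A", the toy crawl reaches B, C, and D but stops before E, which shows how the step limit bounds the size of the final channel list.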

Then, in an analysis conducted specifically for BuzzFeed News, they followed the algorithm’s recommendations outward from the 250 most popular channels on YouTube. (That list of 250 channels came from socialblade.com’s rankings.) In one example, a user starting on a large mainstream channel such as TEDx was shown the channel of conspiracy theorist Alex Jones after just three steps of recommendations. In this case, the recommendations on the TEDx channel’s page listed CNN, which in turn suggested Fox News, which led to Alex Jones.

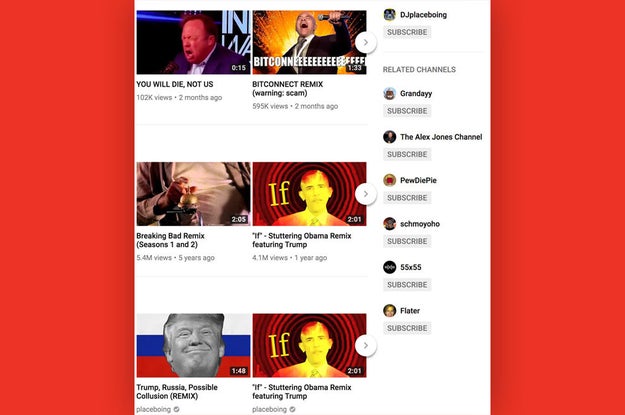
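Counting "steps of recommendations" between two channels amounts to finding a shortest path in a directed graph of suggestions. The following is a minimal sketch of that calculation, assuming the recommendation links are already collected in a dictionary. The function name `recommendation_path` is hypothetical, and the graph holds only the three links named in the TEDx example above, not real crawl data.

```python
from collections import deque


def recommendation_path(graph, start, target):
    """Return the shortest chain of recommendations from start to target,
    or None if target cannot be reached by following suggestions."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        channel = queue.popleft()
        if channel == target:
            path = []
            while channel is not None:  # walk parent links back to the start
                path.append(channel)
                channel = parent[channel]
            return path[::-1]
        for suggested in graph.get(channel, []):
            if suggested not in parent:
                parent[suggested] = channel
                queue.append(suggested)
    return None


# Only the links described in the article's example; everything else is omitted.
graph = {
    "TEDx": ["CNN"],
    "CNN": ["Fox News"],
    "Fox News": ["Alex Jones"],
}
path = recommendation_path(graph, "TEDx", "Alex Jones")
print(" -> ".join(path))
print(len(path) - 1, "steps")
```

Because recommendations are directed, the same function returns `None` when asked for a path from "Alex Jones" back to "TEDx" in this small graph. That says nothing about the real network, where a source told BuzzFeed News that links can also run from fringe channels toward mainstream ones.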

Kaiser and Rauchfleisch found that for channels with little or no political content, such as one that creates video remixes and mashups, the algorithm suggested conspiracy channels like Jones' and that of accused Holocaust denier Styxhexenhammer666. The recommendations also included PewDiePie, the biggest channel on YouTube.

The recommended channels for Placeboing, a YouTuber who makes video remixes and mashups.

YouTube

"So here we can see the step from music to music/remixes/memes to the far-right," Kaiser, a fellow and affiliate at the Berkman Klein Center for Internet & Society at Harvard University, told BuzzFeed News.

YouTube’s channel recommendations change daily and are not personalized for users, according to information from the company. This means all users are given the same channel recommendations in the Related Channels sidebar. A person familiar with the recommendation algorithm told BuzzFeed News that channel recommendations are based on data gathered about the channels users consume in the same session, as well as topic overlap.

Given these factors, Kaiser said this means channel recommendations “are more stable, less prone to personalization and virality” than YouTube’s video recommendations.

In their paper, Kaiser and Rauchfleisch, an assistant professor at the Graduate Institute of Journalism at the National Taiwan University, said channel recommendations also reveal connections between YouTube accounts that may not otherwise be apparent. They surface the relationships that exist between channels due to audience overlap, which in turn helps create networks of channels with similar biases.

"These channels exist, they interact, their users overlap to a certain degree. YouTube’s algorithm, however, connects them visibly via recommendations," they write.

Kaiser and Rauchfleisch highlighted these connections by visualizing their network of more than 13,500 channels. They labeled different universes of channels that exist in tight recommendation networks, and this is the result:

Jonas Kaiser and Adrian Rauchfleisch / Via medium.com

Political YouTube channels occupy the bottom left of the visualization, where networks of conservative, mainstream, progressive, far-right, and far-left channels exist. However, Kaiser said they are not equal in terms of the size and tightness of their networks. The far right has many more subscribers and channels than the far left.

"Most notably, the far-right is just much more active on YouTube and are very aware of the other prominent channels," Kaiser said. "They do, for example, respond to other far-right videos or invite each other on their shows. This contributes to a sense of collective identity. Furthermore, they attract a larger audience."

They also found that Alex Jones’ channel has a prominent place within the political communities they identified in the data.

"Within the political communities, Alex Jones’ channel is one of the most recommended ones … it connects the two right-wing communities," they write.

Jonas Kaiser and Adrian Rauchfleisch / Via medium.com

The result, according to the paper, is that channel recommendations connect those "that might be more isolated without the influence of the algorithm, and thus helps to unite the right" by potentially pushing users to visit and subscribe to channels they may not otherwise know about.

In contrast, Kaiser told BuzzFeed News, the far-left "is small, less active, and fans out into different communities like communists, anarchists, socialists, etc., that might not even like each other too much."

Kaiser said YouTube needs to be aware of how its channel recommendations can push people to the extremes, or create the impression that fringe channels on the right and left are in fact part of the mainstream.

"So it might happen that Infowars is just part of what people might think of [as] ‘normal’ on YouTube because they’ve seen it recommended again and again," he said.

Kaiser is also concerned about the filter bubble effects of recommendations when it comes to far-right channels.

"Once you’ve entered the bubble, YouTube keeps throwing these recommendations to other conspiracy and/or far-right channels at you. And this might have a deeper impact in the long run," he said.

from BuzzFeed - USNews https://ift.tt/2qrYCtc
